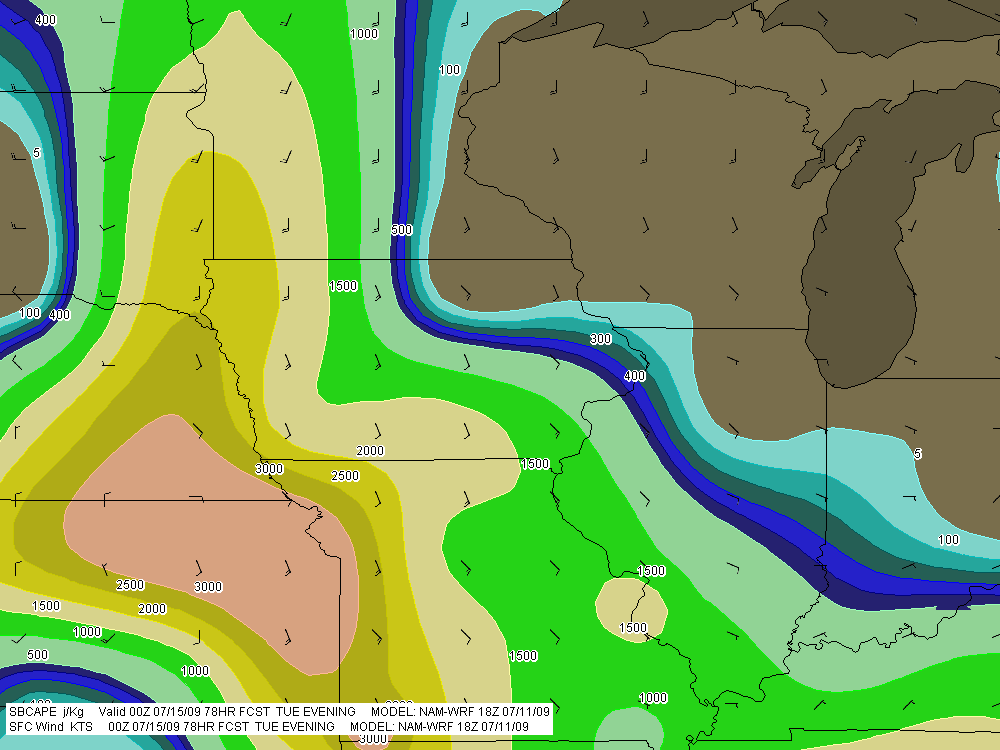

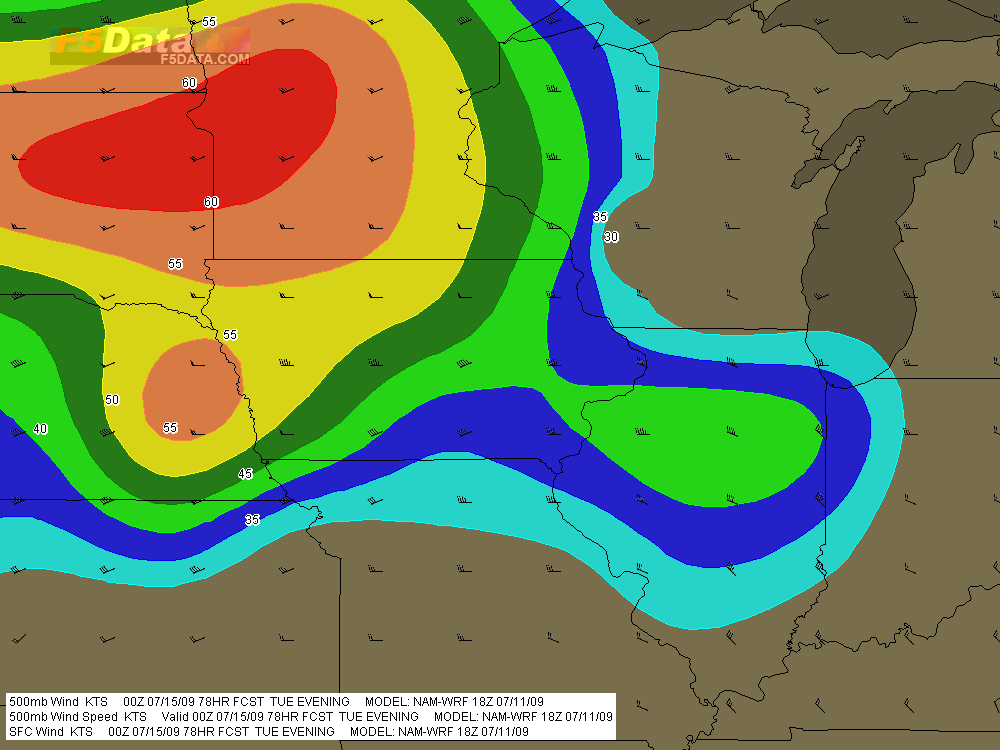

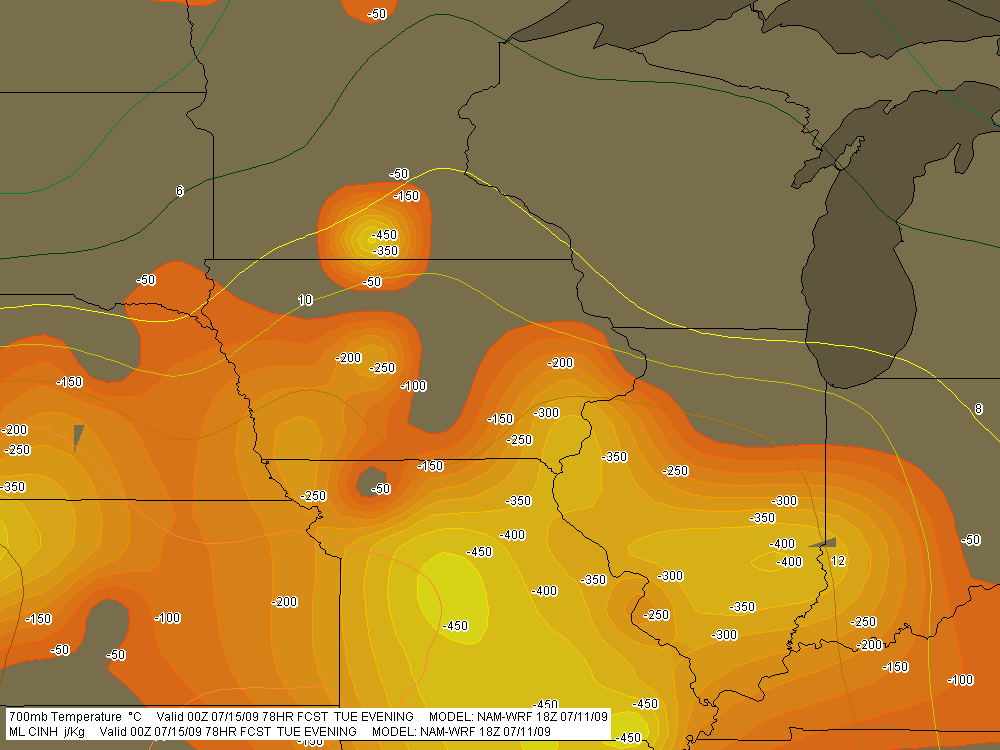

A significant weather event appears to be shaping up for the Northern Plains and Corn Belt this coming Tuesday. For all you weather buffs and storm chasers, here are a few maps from the 18Z NAM-WRF run for 7 p.m. CT Tuesday night (technically, 00Z Wednesday), courtesy of F5 Data.

A couple items of note:

* The NAM-WRF is much less aggressive with capping than the GFS. The dark green 700mb isotherm that stretches diagonally through central Minnesota marks the 6 °C contour, and the yellow line to its south is the 8 °C isotherm.

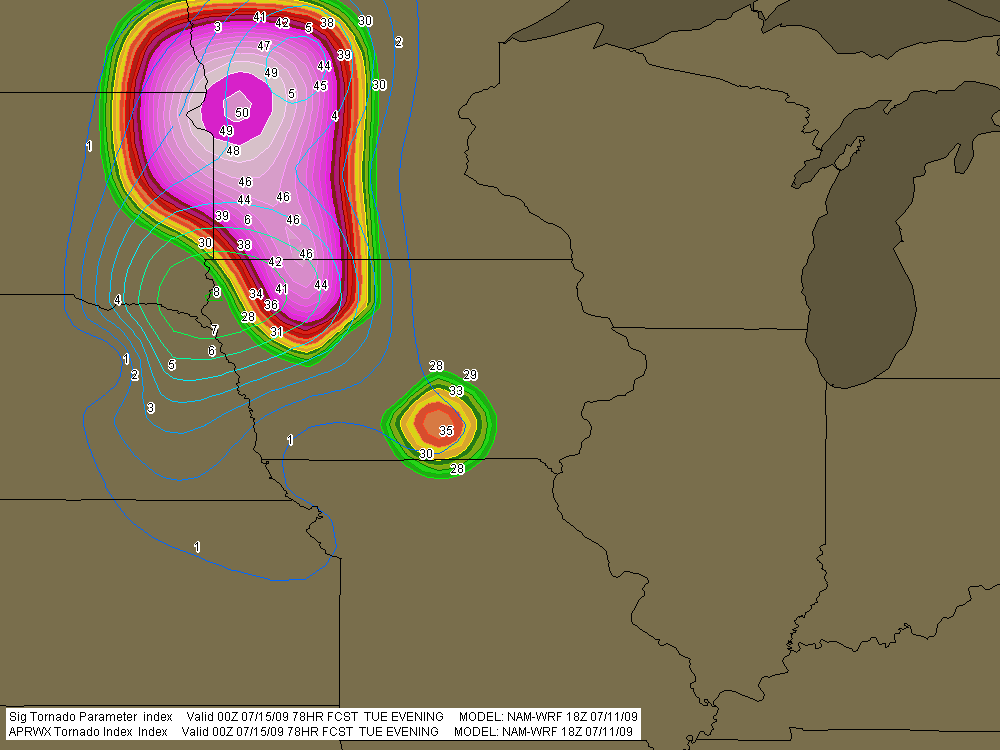

* F5 Data's proprietary APRWX Tornado Index shows a bullseye of 50, which is quite high (“Armageddon,” as F5 software creator Andy Revering puts it). The Significant Tornado Parameter (STP) is also high, with a tiny bullseye of 8 in extreme northwest Iowa by the Missouri River.
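For anyone curious how an STP value like that 8 comes about, here is a minimal Python sketch of the fixed-layer STP formulation published by the Storm Prediction Center. It is an assumption that this is the version F5 Data plots; SPC also publishes an effective-layer STP that adds a convective inhibition (CIN) term, and F5's implementation may differ. The proprietary APRWX index isn't public, so it isn't reproduced here, and the input values below are hypothetical, not taken from the NAM run.

```python
def stp_fixed_layer(sbcape_jkg, sblcl_m, srh1_m2s2, bwd6_ms):
    """Fixed-layer Significant Tornado Parameter (assumed SPC formulation).

    sbcape_jkg: surface-based CAPE (J/kg)
    sblcl_m:    surface-based LCL height (m)
    srh1_m2s2:  0-1 km storm-relative helicity (m^2/s^2)
    bwd6_ms:    0-6 km bulk wind difference (m/s)
    """
    cape_term = sbcape_jkg / 1500.0

    # LCL term: full weight below 1000 m, zero above 2000 m, linear in between.
    if sblcl_m < 1000.0:
        lcl_term = 1.0
    elif sblcl_m > 2000.0:
        lcl_term = 0.0
    else:
        lcl_term = (2000.0 - sblcl_m) / 1000.0

    srh_term = srh1_m2s2 / 150.0

    # Shear term: zero below 12.5 m/s, capped at 1.5 above 30 m/s.
    if bwd6_ms < 12.5:
        shear_term = 0.0
    elif bwd6_ms > 30.0:
        shear_term = 1.5
    else:
        shear_term = bwd6_ms / 20.0

    return cape_term * lcl_term * srh_term * shear_term


# Hypothetical environment: 3,000 J/kg CAPE, low LCL, strong low-level helicity.
print(stp_fixed_layer(3000, 900, 300, 25))  # 5.0
```

Because the terms multiply, a high STP needs every ingredient at once: lots of CAPE alone won't do it if the LCL is high or the shear is weak, since either of those terms can zero out the whole product.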

Obviously, all this will change from run to run. For now, it’s enough to say that there may be a chase opportunity shaping up for Tuesday.

As for Wednesday, well, we’ll see. The 12Z GFS earlier today showed good CAPE moving into the southern Great Lakes, but the surface winds were from the west, which suggests the linear junk we’re all too used to. We’ve still got a few days, though, and anything can happen in that time.

SBCAPE in excess of 3,000 J/kg with nicely backed surface winds throughout much of the region.

500mb winds with wind barbs.

MLCINH (shaded) and 700mb temperatures (contours).

APRWX Tornado Index (shaded) and STP (contours). Note the exceedingly high APRWX bullseye.